Experiment

We use a joint EEG-fMRI dataset originally recorded with the intent to explore the effects of music on the emotions of the listener. The full details of the experiments are described elsewhere in Ref.59 and the data is publicly available60,61. We briefly summarise the key details of the dataset below.

Outline

Participants were asked to listen to two different sets of music. The first set comprised a collection of synthetically generated pieces of piano music, which were generated to target specific affective states and were pre-calibrated to ensure they could induce the targeted affects in their listeners. The second set comprised pre-existing classical piano pieces, which were chosen for their ability to induce a wide range of different affects.

In this study we only make use of the neural data recorded during the generated music listening task. As detailed below, participants listened to a series of pieces of music over different types of trials. In some trials participants were asked to continuously report their emotions, while in others they were asked to just listen to the music.

Participants

A total of 21 healthy adults participated in the study. All participants were aged between 20 and 30 years old and were right-handed with normal or corrected to normal vision and normal hearing. All participants were screened to ensure they could safely participate in a joint EEG-fMRI study. Ten of the participants were female. All participants received £20.00 (GBP) for their participation.

Ethics

Ethical permission was granted for the study by the University of Reading research ethics committee, where the study was conducted. All experimental protocols and methods were carried out in accordance with relevant ethical guidelines. Informed consent was obtained from all participants.

fMRI

Functional magnetic resonance imaging (fMRI) was recorded using a 3 Tesla Siemens Magnetom Trio scanner with Syngo software (version MR B17) and a 37-channel head coil. The scanning sequence used comprised a gradient echo planar localizer sequence followed by an anatomical scan (field of view: 256 \(\times\) 256 \(\times\) 176 voxels, TR = 2020 ms, TE = 2.9 ms, dimensions of voxels = 0.9766 \(\times\) 0.9766 \(\times\) 1 mm, flip angle = 9°). This was then followed by a set of gradient echo planar functional sequences (TR = 2000 ms, TE = 30 ms, field of view = 64 \(\times\) 64 \(\times\) 37 voxels, voxel dimensions = 3 \(\times\) 3 \(\times\) 3.75 mm, flip angle = 90°). The final sequence, applied after the music listening part of the experiment was completed, was another gradient echo planar sequence.

EEG

EEG was recorded via an MRI-compatible BrainAmp MR and BrainCap MR EEG system (BrainProducts Inc., Germany). EEG was recorded from 32 channels (31 channels for EEG and 1 channel for electrocardiogram) at a sample rate of 5000 Hz without filtering and with an amplitude resolution of 0.5 \(\upmu\)V. A reference channel was placed at position FCz on the international 10/20 system for electrode placement and the impedances on all channels were kept below 15 k\(\Omega\) throughout the experiment.

Co-registration of the timing of the EEG and fMRI recordings was achieved by a combination of the BrainVision recording software (BrainProducts, Germany), which recorded trigger signals from the MRI scanner, and custom stimulus presentation software written in Matlab (Mathworks, USA) with Psychtoolbox62.

Stimuli

The music played to the participants was generated with the intention of inducing a wide range of different affective states. In total 36 different musical pieces were generated to target 9 different affective states (combinations of high, neutral, and low valence and arousal).

Each piece of music was 40 s long and was generated by an affectively driven algorithmic composition system that was based on an artificial neural network63 and had been previously validated on an independent pool of participants64. The resulting music was a piece of monophonic piano music as played by a single player.

Tasks

The experiment was divided into a series of individual tasks for the participants to complete. These tasks fell into three different types:

1. Music only trials: In these trials participants were asked to just listen to a piece of music.

2. Music reporting trials: In these trials participants were asked to listen to a piece of music and, as they listened, to continuously report their current felt emotions on the valence-arousal circumplex65 via the FEELTRACE interface66.

3. Reporting only trials: These trials were used to control for effects of motor control of the FEELTRACE interface. Participants were shown, on screen, a recording of a previous report they had made with FEELTRACE and were asked to reproduce their recorded movements as accurately as they could. No music was played during these trials.

Within each trial participants were first presented with a fixation cross, which was shown on screen for 1–3 s with a random, uniformly drawn, duration. The task then took 40 s to complete and was then followed by a short 0.5 s break.

All sound was presented to participants via MRI-compatible headphones (NordicNeurolab, Norway). Participants also wore ear-plugs to protect their hearing and the volume levels of the music were adjusted to a comfortable level for each participant before the start of the experiment.

The trials were presented in a pseudo-random order and were split over 3 runs, each of which was approximately 10 min long. A 1-min break was given between each pair of runs and each run contained 12 trials in total.

Pre-processing

Both the EEG and the fMRI signals were pre-processed to remove artefacts and allow for further analysis.

fMRI

The fMRI data was pre-processed using SPM12 software67 running in Matlab 2018a.

Slice time correction was applied first, using the first slice of each run as the reference image. This was followed by removal of movement related artefacts from the images via a process of realignment and un-warping using the approach originally proposed by Friston et al.67. The field maps recorded during the scan sequences were used to correct for image warping effects and remove movement artefacts. A 4 mm separation was used with a Gaussian smoothing kernel of 5 mm. A 2nd degree spline interpolation was then used for realignment and a 4th degree spline interpolation for un-warping the images.

We then co-registered the functional scans against the high-resolution anatomical scan for each participant before normalising the functional scans to the high-resolution anatomical scan.

Finally, the functional scans were smoothed with a 7 mm Gaussian smoothing kernel and a 4th degree spline interpolation function.

EEG

The fMRI scanning process induces considerable artefacts in the EEG. To remove these mechanistic artefacts the Average Artefact Subtraction (AAS) algorithm was used68. The version of AAS implemented in the Vision Analyser software (BrainProducts, Germany) was used. The cleaned EEG was then visually checked to confirm that all the scanner artefacts had been removed.

Physiological artefacts were then manually removed from the signals. The EEG was first decomposed into statistically independent components (ICs) by application of second order blind identification (SOBI)69, a variant of independent component analysis that identifies a de-mixing matrix that maximises the statistical independence of the second order derivatives of the signals.

Each resulting IC was then manually inspected in the time, frequency, and spatial domains by a researcher with more than 10 years of experience in EEG artefact removal (author ID). Components that were judged to contain artefacts (physiological or otherwise) were manually removed before reconstruction of the cleaned EEG. A final visual inspection of the cleaned EEG was performed to confirm that the resulting signals were free from all types of artefact.

fMRI analysis

The fMRI dataset was used to identify voxels with activity that significantly differs between music listening (trials in which participants listen to music only and trials in which participants both listen to music and report their current emotions) and non-music listening trials (trials in which participants only use the FEELTRACE interface without hearing music).

Specifically, a general linear model (GLM) was constructed for each participant and used to identify voxels that significantly differ (T-contrast) between these two conditions. Family-wise error rate was used to correct for multiple comparisons (corrected p < 0.05). The resulting clusters of voxels were used to identify brain regions which exhibit activity that significantly co-varies with whether the participants were listening to music or not.

Source localisation

An fMRI-informed EEG source localisation approach was used to extract EEG features that are most likely to be informative for reconstruction of the music participants listened to from their neural data. To this end we first built a high resolution accurate conductivity model of the head. We then used a beam-former source reconstruction method, implemented in Fieldtrip70, to estimate the activity at a set of individual source locations in the brain. These source locations were chosen based on the fMRI analysis results on a per participant basis.

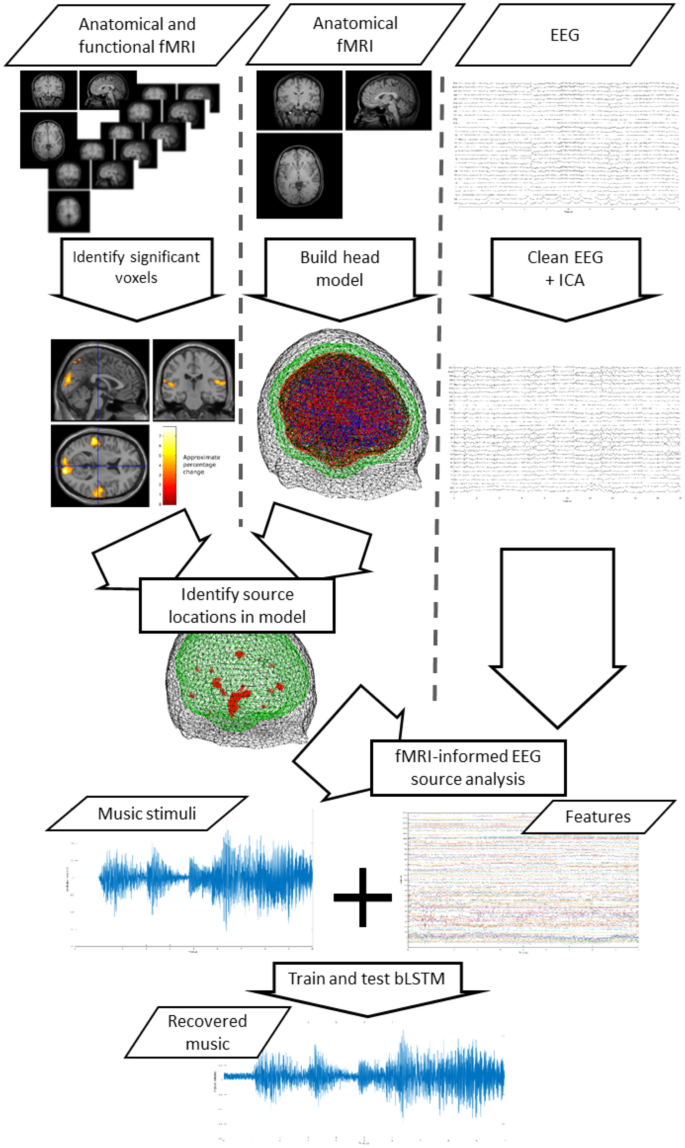

The entire process is illustrated in Fig. 6.

Analysis pipeline illustration. Anatomical MRI is used to construct head models, while fMRI is used to identify voxels that differ between music and no music conditions. EEG is decomposed via ICA and fMRI-informed source analysis is used to characterise activity at fMRI-identified locations. The resulting feature set is used to train a biLSTM to recover the music a participant listened to. A cross-fold train and validation scheme is used for each participant.

Model construction

A detailed head model was constructed for each participant to model conductivity within the head from each participant’s individual anatomical MRI scan. Fieldtrip was used to construct this model70.

The anatomical scan from each participant was first manually labelled to identify the positions of the nasion and the left and right pre-auricular points. The scan was then segmented into gray matter, white matter, cerebrospinal fluid, skull, and scalp tissue using the Fieldtrip toolbox70. Each segmentation was then used to construct a 3-dimensional mesh model out of sets of vertices (3000 vertices for the gray matter and the cerebrospinal fluid, 2000 vertices for each of the other segments). These mesh models were then used to create a conductivity model of the head via the finite element method71,72. We specified the conductivity of each layer using the following standardised values: gray matter \(=0.33\) S/m, white matter \(=0.14\) S/m, cerebrospinal fluid \(=1.79\) S/m, skull \(=0.01\) S/m, and scalp \(=0.43\) S/m. These values were chosen based on recommendations in Refs.71,73,74.

The EEG channel locations were then manually fitted to the model by a process of successive rotations, translations, and visual inspection. Finally, a lead-field model of the dipole locations inside the conductivity model was computed from a grid of 1.5 \(\times\) 1.5 \(\times\) 1.5 cm voxels.

Source estimation

Source estimation was achieved by using the conductivity head model and the eLoreta source reconstruction method75,76 to estimate the electro-physiological activity at specific voxel locations within the head model. Specifically, voxel locations in the model were chosen based on the results of analysis of the fMRI datasets (see the “fMRI analysis” section).

From the set of voxels that were identified, via the GLM, as containing activity that significantly differs between the music and no music conditions, a sub-set of voxel cluster centres was identified as follows.

1. Begin with an empty set of voxel cluster locations V and a set of candidate voxels C, which contains all the voxels identified via our GLM-based fMRI analysis as significantly differing between the music and no music trials.

2. Identify the voxel in C with the largest T-value (i.e. the voxel that has the largest difference in variance between the music and no music conditions).

3. Measure the Euclidean distance between the spatial location of this voxel in the head and all voxels currently in the set V. If the smallest distance is greater than our minimum distance m (or if V is still empty), add the voxel to the set V.

4. Remove the candidate voxel from the set C.

5. Repeat steps 2–4 until the set V contains \(n_l\) voxels.

This process ensures that we select a sub-set of voxel locations that differentiate the music and no music trials, while ensuring that the voxels in this set are spatially distinct from one another. This results in a set of \(n_l\) voxel locations that characterise the distributed network of brain regions involved in music listening. In our implementation we set the minimum distance m = 3 cm and \(n_l\) = 4 voxel locations.
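The selection procedure above can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the study: the function name `select_voxel_centres`, the input format (a list of T-value and coordinate pairs, with coordinates in cm), and the example values are all assumptions made for the sketch. Visiting candidates in descending order of T-value is equivalent to repeatedly taking the largest remaining candidate and removing it from C.

```python
import math


def select_voxel_centres(candidates, min_dist_cm=3.0, n_locations=4):
    """Greedily pick spatially distinct voxels in order of decreasing T-value.

    candidates: list of (t_value, (x, y, z)) tuples, coordinates in cm
    (hypothetical input format chosen for this sketch).
    """
    # Steps 2 and 4: visit candidates from largest to smallest T-value.
    ordered = sorted(candidates, key=lambda c: c[0], reverse=True)
    selected = []  # the set V
    for _t_value, position in ordered:
        if len(selected) == n_locations:  # step 5: stop once V is full
            break
        # Step 3: keep the voxel only if it is further than min_dist_cm
        # from every voxel already in V (always true while V is empty).
        if all(math.dist(position, chosen) > min_dist_cm for chosen in selected):
            selected.append(position)
    return selected


# Toy example with made-up T-values and coordinates.
example = [
    (9.0, (0, 0, 0)), (8.5, (1, 0, 0)), (8.0, (4, 0, 0)), (7.0, (4, 1, 0)),
    (6.0, (0, 5, 0)), (5.0, (4, 5, 0)), (4.0, (8, 0, 0)),
]
print(select_voxel_centres(example))
```

If fewer than \(n_l\) candidates satisfy the distance constraint, this sketch simply returns the shorter list.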

Feature set construction

To extract a set of features from the EEG to use for reconstructing the music played to participants we first use independent component analysis (ICA) to separate the EEG into statistically independent components. Each independent component is then projected back to the EEG electrodes by multiplying the component by the inverse of the de-mixing matrix identified by the ICA algorithm. This gives an estimate of the EEG signals on each channel if only that independent component were present.

This IC projection is then used, along with the pre-calculated head model for the participant, to estimate the source activity at each of the \(n_l\) = 4 locations identified by our source estimation algorithm (see “Source estimation” section). This results in a matrix of 4 \(\times N_s\) sources for each IC projection, where \(N_s\) denotes the total number of samples in the recorded EEG signal set. These matrices are generated for each IC projection and concatenated together to form a feature matrix of dimensions \((4 \times M) \times N_s\), where M denotes the number of EEG channels (31 in our experiment). Thus, our final feature vector is a matrix of EEG source projections of dimensions \(124 \times N_s\).
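To make the bookkeeping of dimensions concrete, the following sketch builds the feature matrix from toy data using plain Python lists. It assumes that source estimation can be expressed as a linear spatial filter of size \(n_l \times M\) applied to the channel data; the names `back_project`, `apply_filter`, `build_feature_matrix`, and all numerical values are hypothetical and are not taken from our pipeline.

```python
def back_project(mixing, components, k):
    """Channel-space EEG (M x N_s) that would be seen if only IC k were present.

    mixing: M x K matrix (inverse of the ICA de-mixing matrix).
    components: K x N_s matrix of IC time series.
    """
    return [[row[k] * sample for sample in components[k]] for row in mixing]


def apply_filter(spatial_filter, eeg):
    """Apply an assumed linear inverse operator (n_l x M) to EEG (M x N_s)."""
    n_samples = len(eeg[0])
    return [
        [sum(w * channel[t] for w, channel in zip(weights, eeg))
         for t in range(n_samples)]
        for weights in spatial_filter
    ]


def build_feature_matrix(mixing, components, spatial_filter):
    """Stack the n_l source time series of every IC projection row-wise."""
    features = []
    for k in range(len(components)):
        projection = back_project(mixing, components, k)
        features.extend(apply_filter(spatial_filter, projection))
    return features  # (n_l * K) x N_s


# Toy example: 3 channels, 3 ICs, 2 samples, 2 source locations.
toy_mixing = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
toy_components = [[1, 2], [3, 4], [5, 6]]
toy_filter = [[1, 1, 1], [1, 0, 0]]
toy_features = build_feature_matrix(toy_mixing, toy_components, toy_filter)
print(len(toy_features), len(toy_features[0]))
```

With 31 components and 4 source locations the same stacking gives \(4 \times 31 = 124\) rows, matching the feature dimension stated above.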

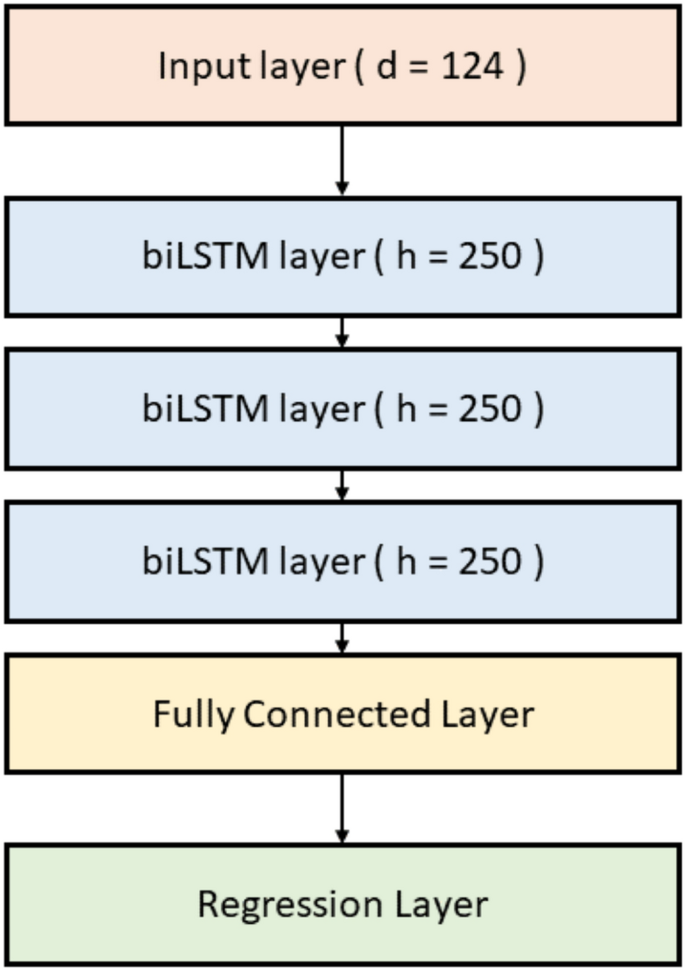

Music prediction

Reconstruction of the music participants heard from fMRI-informed EEG sources is attempted via a deep neural network. Specifically, a stacked 4-layer bi-directional long short-term memory (biLSTM) network is constructed. The first layer is a sequence input layer with the same number of inputs as features (124). Four biLSTM layers are then stacked, each with 250 hidden units. A single fully connected layer is then added to the stack, followed by a regression layer. The architecture of the biLSTM network is illustrated in Fig. 7.

Architecture of the biLSTM used to attempt to recover heard music from our fMRI-informed EEG source analysis.

The music played to each participant is down-sampled to the same sample rate as the EEG (1000 Hz). Both the music and the feature vector (see the “Feature set construction” section) are then further down-sampled by a factor of 10 from 1000 to 100 Hz.
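The text does not specify which resampling routine is used for the factor-of-10 reduction. As one simple illustration, the sketch below averages consecutive blocks of 10 samples, which acts as a crude low-pass filter before decimation. The function name `downsample_by_averaging` is hypothetical, and block averaging is an assumption made for the example rather than a description of our implementation.

```python
def downsample_by_averaging(signal, factor=10):
    """Reduce the sample rate by `factor` by averaging non-overlapping blocks.

    Trailing samples that do not fill a complete block are discarded.
    """
    n_blocks = len(signal) // factor
    return [
        sum(signal[i * factor:(i + 1) * factor]) / factor
        for i in range(n_blocks)
    ]


# One second of a toy ramp signal sampled at 1000 Hz becomes 100 samples.
one_second = list(range(1000))
reduced = downsample_by_averaging(one_second, factor=10)
print(len(reduced))
```

The same function would be applied to every row of the feature matrix and to the music signal so that both remain aligned sample by sample.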

The network is trained and tested to predict this music from the EEG sources within a 3 \(\times\) 3 cross-fold train and test scheme. Specifically, each run of the 3 runs from the experiment is used once as the test set in each fold. The training and testing data comprise the time series of all EEG sample points and music samples from all time points when the participants listened to music (trial types 1 and 2, see the experiment description above) within each run.
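One reading of this scheme is a leave-one-run-out split, in which each run serves once as the test set while the remaining runs form the training set. The sketch below enumerates such folds; the function name `leave_one_run_out` and the run labels are illustrative assumptions.

```python
def leave_one_run_out(run_ids):
    """Return (training runs, test run) pairs, one fold per run."""
    folds = []
    for test_run in run_ids:
        train_runs = [run for run in run_ids if run != test_run]
        folds.append((train_runs, test_run))
    return folds


for train_runs, test_run in leave_one_run_out([1, 2, 3]):
    print("train on runs", train_runs, "test on run", test_run)
```

Splitting by run, rather than by individual samples, keeps temporally adjacent samples from the same trial out of both the training and test sets at once.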

Statistical analysis

We evaluate the performance of our decoding model in several ways.

First, we compare the time series of the reconstructed music with the original music played to the participants via visual inspection and via a correlation analysis in both the time and frequency domains. Specifically, the Pearson’s correlation coefficient between the original and reconstructed music (downsampled to 100 Hz) is measured in the time domain. We then compare the power spectra of the original and reconstructed music via Pearson’s correlation coefficient. We also measure the structural similarity38 of the time–frequency spectrograms between the original and reconstructed music.

For each of these indices of similarity between the original and reconstructed music we measure the statistical significance via a bootstrapping approach. We first generate sets of reconstructed music under the null hypothesis that the reconstructed music is not related to the original music stimuli by shuffling the order of the reconstructed music trials. We repeat this 4000 times for each similarity measure (correlation coefficients and structural similarity) and measure the similarity between the original music and the shuffled reconstructed music in each case in order to generate null distributions. The probability that the measured similarity between the original music and the un-shuffled reconstructed music is drawn from this null distribution is then measured in order to estimate the statistical significance of the similarity measures.
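The trial-shuffling procedure can be sketched as follows for the time-domain correlation measure. The sketch makes several assumptions that are not stated in the text: similarity is summarised as the mean Pearson correlation across trials, the p-value is one-sided, and a +1 correction is applied so that the estimate is never exactly zero. The names `pearson` and `shuffled_trial_test` and the toy signals are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def shuffled_trial_test(original, reconstructed, n_shuffles=4000, seed=0):
    """Compare observed similarity against a null built by shuffling trial order."""
    rng = random.Random(seed)

    def mean_similarity(recon_trials):
        scores = [pearson(o, r) for o, r in zip(original, recon_trials)]
        return sum(scores) / len(scores)

    observed = mean_similarity(reconstructed)
    shuffled = list(reconstructed)
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # break the pairing between trials
        null.append(mean_similarity(shuffled))
    # One-sided p-value with +1 correction (an assumption of this sketch).
    exceed = sum(score >= observed for score in null)
    return observed, (exceed + 1) / (n_shuffles + 1)


# Toy data: six "trials" of sinusoids, reconstructed up to scale and offset.
toy_original = [[math.sin(2 * math.pi * (k + 1) * t / 50) for t in range(50)]
                for k in range(6)]
toy_reconstructed = [[0.5 * v + 0.1 for v in trial] for trial in toy_original]
print(shuffled_trial_test(toy_original, toy_reconstructed, n_shuffles=200, seed=1))
```

The same structure applies to the spectral correlation and structural similarity measures by swapping the `pearson` call for the relevant similarity function.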

Second, we use the reconstructed music to attempt to identify which piece of music a participant was listening to within each trial. If the decoding model is able to reconstruct a reasonable approximation of the original music then it should be possible to use this reconstructed music to identify which specific piece of music a participant was listening to in each trial.

Specifically, we first z-score the decoded and original music time series in order to remove any differences in amplitude scaling. We then band-pass filter both signals in the range 0.035 Hz to 4.75 Hz. These parameters were chosen to preserve the visually apparent similarities in the amplitude envelopes of the original and decoded music, which were observed upon visually inspecting a subset of the data (participants 1 and 2).

We then segment the signals into individual trials as defined by the original experiment. Specifically, each trial is 40 s long and comprises a single piece of music. For a given trial the structural similarity is measured between the time–frequency spectra of the original music played to the participant in that trial and the time–frequency spectra of the reconstructed music. The structural similarity is then also measured between the time–frequency spectra of the reconstructed music for that same trial and the time–frequency spectra of the original music played to the participant in all the other trials in which the participant heard a different piece of music. Specifically, we measure

$$\begin{aligned} C_{k,k} = \text{ ssim }( R_k, M_k ), \end{aligned} \tag{1}$$

and

$$\begin{aligned} C_{k,i} = \text{ ssim }( R_k, M_i )~~~~~~~~\forall \ i \in A, \end{aligned} \tag{2}$$

where \(R_k\) denotes the time–frequency spectrogram of the reconstructed music for trial k, \(M_i\) denotes the time–frequency spectrogram of the original music played to the participant in trial i, and \(\text{ ssim }\) indicates the use of the structural similarity measure. For a given trial k the value of \(C_{k,i}\) is measured for all trials in the set A (\(i \in A\)), where A is defined as

$$\begin{aligned} A = \{1, \ldots, N_t\} \setminus \{k\}, \end{aligned} \tag{3}$$

and denotes the set of all trials \(1, \ldots, N_t\) (where \(N_t\) denotes the number of trials) excluding the trial, k, for which we reconstructed the music played to the participant via our decoding model.

We then order the set of structural similarity measures \(C = \{C_{k,i}\}~\forall \ i \in \{1, \ldots, N_t\}\) and identify the position of \(C_{k,k}\) in this ordered list in order to measure the rank accuracy of trial k. Rank accuracy measures the normalised position of \(C_{k,k}\) in the list and is equal to 0.5 under the null hypothesis that the music cannot be identified. In other words, rank accuracy measures the ability of our decoder to correctly decode our music by measuring how similar the decoded and original music are to one another compared to the similarity between the decoded music and all other possible pieces of music. Finally, we measure the statistical significance of our rank accuracy via the method described in Ref.77.
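As an illustration of the rank accuracy measure, the sketch below takes the similarity values \(C_{k,i}\) for one trial k and returns the fraction of other trials whose similarity is lower than \(C_{k,k}\). The exact normalisation and the handling of ties are not specified in the text, so the choices here (dividing by \(N_t - 1\) and counting ties as half) are assumptions, as is the function name `rank_accuracy`.

```python
def rank_accuracy(similarities, k):
    """Normalised rank of the matching trial's similarity.

    similarities: list of C[k][i] for i = 0 .. N_t - 1 (requires N_t >= 2).
    Returns 1.0 when the matching trial is the most similar, 0.0 when it is
    the least similar, and 0.5 on average if the ordering is random.
    """
    target = similarities[k]
    others = [s for i, s in enumerate(similarities) if i != k]
    below = sum(s < target for s in others)
    ties = sum(s == target for s in others)  # ties count as half (assumption)
    return (below + 0.5 * ties) / len(others)


# Toy similarity values for a four-trial example with k = 1.
print(rank_accuracy([0.2, 0.9, 0.4, 0.1], k=1))
```

Averaging this value over all trials gives a single summary score per participant.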

Effect of tempo

A number of studies have reported significant effects of music tempo on the EEG39,40,41,42. Therefore, we investigate whether the tempo of the music played to participants significantly affects the performance of our decoding model.

Specifically, we estimate the range of tempos within each 40 s long piece of music and the corresponding mean tempo. We then test whether the mean tempo of the music significantly affects the performance of our decoding model by measuring the Pearson’s correlation coefficient between the mean tempo of the music played to the participant within each trial and the corresponding rank accuracy measure of the decoder’s performance for that same trial. Additionally, we measure the likelihood that the mean tempo for the music within a single trial was drawn from the distribution of mean tempos over all trials. This allows us to estimate whether the tempo of the music within a trial is ‘typical’ or less ‘typical’. We measure the correlation between this measure of the typicality of the tempo of the music and the performance of the decoder on this trial to identify whether trials with unusual tempos (faster or slower than usual) are classified more (or less) accurately.
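The typicality measure can be illustrated under the assumption that the distribution of mean tempos is modelled as a Gaussian fitted to all trials; the text does not state which distribution is used, so this choice, the function name `tempo_typicality`, and the tempo values below are purely illustrative.

```python
from statistics import NormalDist, mean, stdev


def tempo_typicality(mean_tempos):
    """Likelihood of each trial's mean tempo under a Gaussian fitted to all trials.

    Higher values indicate a more 'typical' tempo. The Gaussian model is an
    assumption made for this sketch.
    """
    fitted = NormalDist(mean(mean_tempos), stdev(mean_tempos))
    return [fitted.pdf(tempo) for tempo in mean_tempos]


# Made-up mean tempos (beats per minute) for five trials.
toy_tempos = [90, 100, 110, 100, 160]
toy_typicality = tempo_typicality(toy_tempos)
print(toy_typicality)
```

The resulting typicality values would then be correlated with the per-trial rank accuracies in the same way as the mean tempos themselves.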

In both cases we hypothesise that if our decoder is predominantly making use of the tempo of the music there will be significant correlations between the decoder’s performance and either the tempo of the music or the likelihood (typicality) of the tempo of the music.

Confound consideration

The use of headphones to play music to participants presents one potential confounding factor in our analysis. Although the headphones we used were electromagnetically shielded in a way that is suitable for use within an fMRI scanning environment, there is a possibility that their proximity to the EEG electrodes led to some induced noise in the recorded EEG signals. This noise could either be electromagnetic noise from the electrical operation of the headphones or vibrotactile noise from the vibration of the headphones.

We expect that if this is the case the noise removal applied to the EEG should remove this noise. Indeed, our visual inspection of our cleaned EEG signals did not reveal any apparent induced noise. However, we cannot discount the possibility that some residual noise from the headphones (either electromagnetic or vibrotactile in nature) remains in the EEG signal and that this is used as part of the decoding process.

The only way to verify that this was not the case is to attempt to repeat the experiment without the use of headphones. Therefore, we make use of another dataset recorded by our team78 using conventional speakers placed over 1 m away from participants to play similar pieces of music. This dataset contains just EEG recorded from participants while they listened to similar sets of synthetic music stimuli in a separate experiment. As this dataset only contains EEG data, participant-specific fMRI-informed source analysis is not possible. Instead, we use the averaged fMRI results from all our participants in our EEG-fMRI experiments to provide an averaged head model and averaged source dipole locations for the fMRI-informed source analysis step in our decoding pipeline.

We first detail this dataset and then go on to describe how we adapted our analysis pipeline to attempt to decode music played to participants in this experiment.

Dataset

Our EEG-only dataset was originally recorded as part of a set of experiments to develop an online brain-computer music interface (BCMI). These experiments, their results, and the way the dataset was recorded are described in detail in Ref.78. We also describe the key details here.

A cohort of 20 healthy adults participated in our experiments. EEG was recorded from each participant via 32 EEG electrodes positioned according to the international 10/20 system for electrode placement at a sample rate of 1000 Hz.

Participants were invited to participate in multiple sessions to first calibrate, then train, and finally to test the BCMI. For our purposes in this present study we only use the EEG data recorded from participants during the calibration session.

In the calibration session a series of synthetic music clips was played to participants. Each clip was 20 s long and contained pre-generated piano music. The music was generated by the same process used for our EEG-fMRI experiments (see the “Stimuli” section). A total of 90 unique synthetic music clips were played to the participants in random order. Each clip was generated for the purpose of the experiment (ensuring the participants had never heard the clip before) and targeted a specific affective state. Participants were instructed to report their current felt affect as they listened to the music using the FEELTRACE interface in a similar way to the joint EEG-fMRI experiments described above.

Details of the dataset and accompanying stimuli are described in Refs.61,78. The data is also published in Ref.79.

Ethics

Ethical permission for recording this second dataset was also granted by the University of Reading research ethics committee, where the study was originally conducted. All experimental protocols and methods were carried out in accordance with relevant ethical guidelines. Informed consent was obtained from all participants.

Analysis

Our decoding model is modified slightly to attempt to reconstruct the music played to participants in the EEG-only experiments. Specifically, we use the mean of the fMRI results from our cohort of participants in our joint EEG-fMRI dataset to identify the set of voxels for use in our fMRI-informed EEG analysis. Furthermore, the head model used in the fMRI-informed EEG source analysis step in our decoding model is constructed from an averaged MRI anatomical scan provided within SPM1267.

All other stages of our decoding model and analysis pipeline (including EEG source localisation, biLSTM network structure, and statistical analysis) are the same.